Prompting 101

In this guide, we’ll walk you through our suggested prompting workflow for V7 Go projects and give you a handful of tips and tricks for prompt engineering so you can get accurate results quickly.

Prompting for accuracy

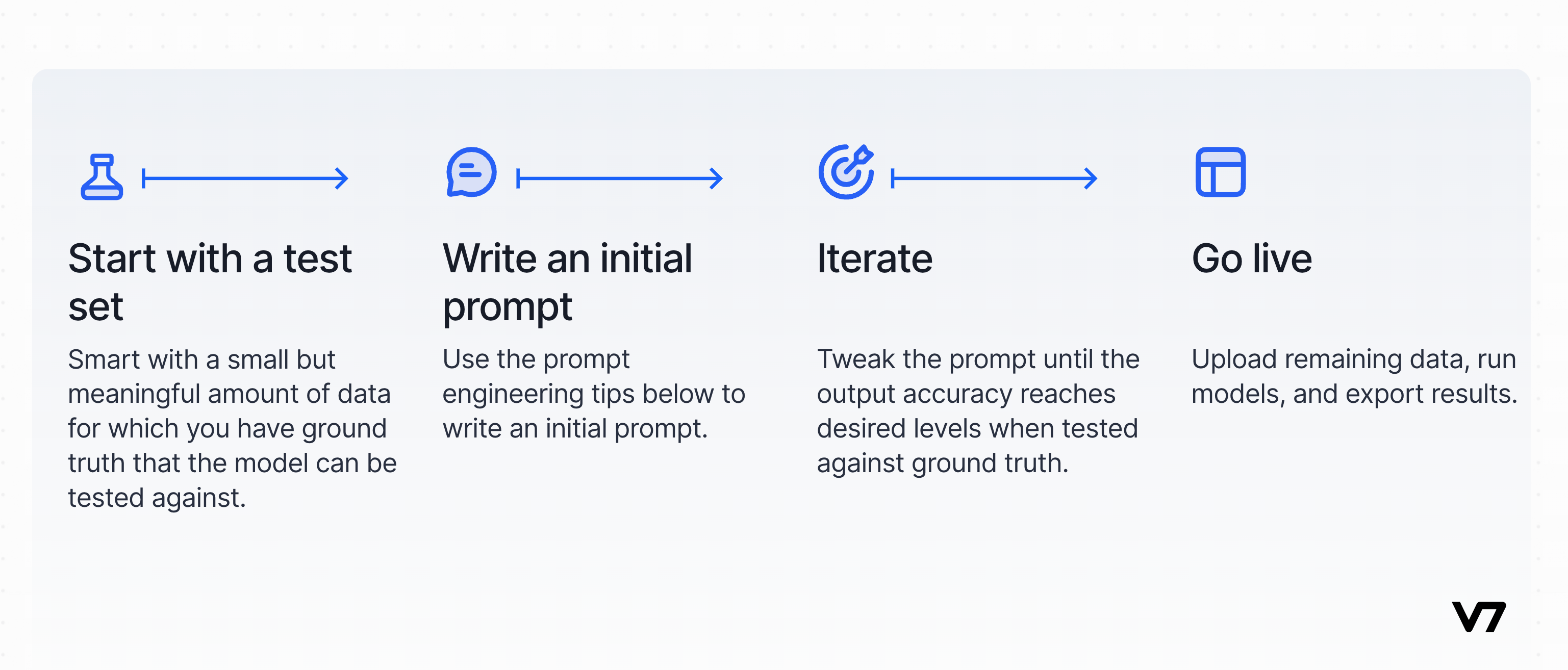

As fun as using LLMs can be, getting them to behave at scale can be frustrating. To get consistent, accurate results from your AI Tools, we recommend using the framework below when starting any new project:

- Start with a test set of data for which you have ground truth

- Write your initial prompt

- Iterate until you’ve hit your desired accuracy level

- Run your AI Tools at scale
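The framework above can be sketched as a small evaluation loop. This is only an illustration under stated assumptions, not V7 Go's API: `run_tool` is a hypothetical stand-in for whatever produces an output for a given prompt and input, and the test set, the `fake_tool` stub, and the 0.9 target are made-up values.

```python
from typing import Callable

# Hypothetical test set: (input text, ground-truth label) pairs.
TEST_SET = [
    ("Invoice total: $120.00", "invoice"),
    ("Dear team, please find the minutes attached", "email"),
    ("Invoice #4471 due on receipt", "invoice"),
    ("Hi Sam, are we still on for Friday?", "email"),
]


def accuracy(run_tool: Callable[[str, str], str], prompt: str, test_set) -> float:
    """Fraction of test items where the tool output matches the ground truth."""
    correct = sum(
        1
        for text, truth in test_set
        if run_tool(prompt, text).strip().lower() == truth
    )
    return correct / len(test_set)


def iterate(run_tool, candidate_prompts, test_set, target=0.9):
    """Try prompts in order and stop at the first one that reaches the target.

    Returns (best_prompt, best_score); if no prompt reaches the target,
    the best-scoring prompt seen is returned.
    """
    best_prompt, best_score = None, -1.0
    for prompt in candidate_prompts:
        score = accuracy(run_tool, prompt, test_set)
        if score > best_score:
            best_prompt, best_score = prompt, score
        if score >= target:
            break
    return best_prompt, best_score


# Stand-in for a real model call so the sketch runs offline (assumption).
def fake_tool(prompt: str, text: str) -> str:
    if "invoice or email" in prompt:
        return "Invoice" if "invoice" in text.lower() else "Email"
    return "unknown"
```

In practice you would replace `fake_tool` with a call to your actual tool and only move on to running at scale once the score on the test set is where you need it.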

Prompt engineering tips and tricks

- Clarity and specificity: Be as clear and specific as possible. Vague or ambiguous prompts can lead to unpredictable or irrelevant outputs. Specify the desired format, style, and content clearly.

- Brevity with detail: Strive for a balance between brevity and necessary detail. Overly lengthy prompts can confuse the model, but too little information might not guide it effectively. You can also use an LLM such as ChatGPT to help find the right balance: paste your prompt in and ask for suggestions.

- Break down large prompts into steps: Wherever possible, create separate properties with individual, brief prompts, rather than a single property with one overly lengthy prompt. Chaining prompts in this way makes it easier to iterate within a project and can improve accuracy.

- Ask the AI Tool to adopt a persona: For tasks requiring domain-specific knowledge, ask an AI Tool to adopt the persona of an expert (for example, “You are an expert technical documentation writer”).

- Specify the output format: State in your prompt the format in which you expect to receive results. Use single-select or multi-select types to force an AI Tool to select from a pre-defined list of outputs, or use JSON and add a desired output format to your prompt.

- Use examples: To ensure consistency across a large number of outputs, add an example output indicating how outputs should be structured and phrased.

- Provide contextual information: Provide relevant context. For instance, if you're asking about a niche topic, a brief explanation or background can help the model understand and respond accurately.

- Stay updated: AI models and capabilities are constantly evolving. Keep yourself updated on the latest developments and best practices in prompt engineering. Go straight to the source and check out documentation written by the makers of the different AI Tools available in V7 Go, like OpenAI’s prompt engineering guide, or, if you prefer videos to text, Anthropic’s prompt engineering deep dive.
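Several of the tips above (persona, context, output format, example) can be combined when you assemble a prompt, and a JSON output format can be checked when the reply comes back. The sketch below is a rough illustration only: `build_prompt` and `parse_response` are hypothetical helper names, not part of V7 Go, and the invoice fields are invented for the example.

```python
import json


def build_prompt(persona: str, context: str, task: str,
                 schema_keys: list[str], example: dict) -> str:
    """Combine persona, context, task, output format, and one example."""
    return "\n\n".join([
        persona,
        f"Context: {context}",
        f"Task: {task}",
        "Respond with a single JSON object containing exactly these keys: "
        + ", ".join(schema_keys) + ".",
        "Example output:\n" + json.dumps(example, indent=2),
    ])


def parse_response(raw: str, schema_keys: list[str]) -> dict:
    """Parse the reply and check that it has exactly the expected keys."""
    # json.loads raises json.JSONDecodeError (a ValueError) on invalid JSON.
    data = json.loads(raw)
    if not isinstance(data, dict) or set(data) != set(schema_keys):
        raise ValueError(f"expected keys {schema_keys}, got {data!r}")
    return data


# Illustrative values only (assumptions, not taken from a real project).
KEYS = ["vendor", "total"]
prompt = build_prompt(
    persona="You are an expert accounts-payable analyst.",
    context="The documents are scanned supplier invoices.",
    task="Extract the vendor name and the invoice total.",
    schema_keys=KEYS,
    example={"vendor": "Acme Ltd", "total": "120.00"},
)
```

Rejecting replies that do not match the expected keys makes format drift visible early, which is useful when you are iterating on a prompt against a test set.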

Updated over 1 year ago