AI Citations

AI Citations, powered by visual grounding, allow you to see exactly where a model has drawn its reasoning from.

Why did we build this?

Robustness, reliability, and trust are among the main challenges of building with LLMs. How can you trust an answer that has come out of a black box?

AI Citations, powered by visual grounding, address this by highlighting the passages in a source file that the model drew its reasoning from. It's the LLM equivalent of 'showing your working'.

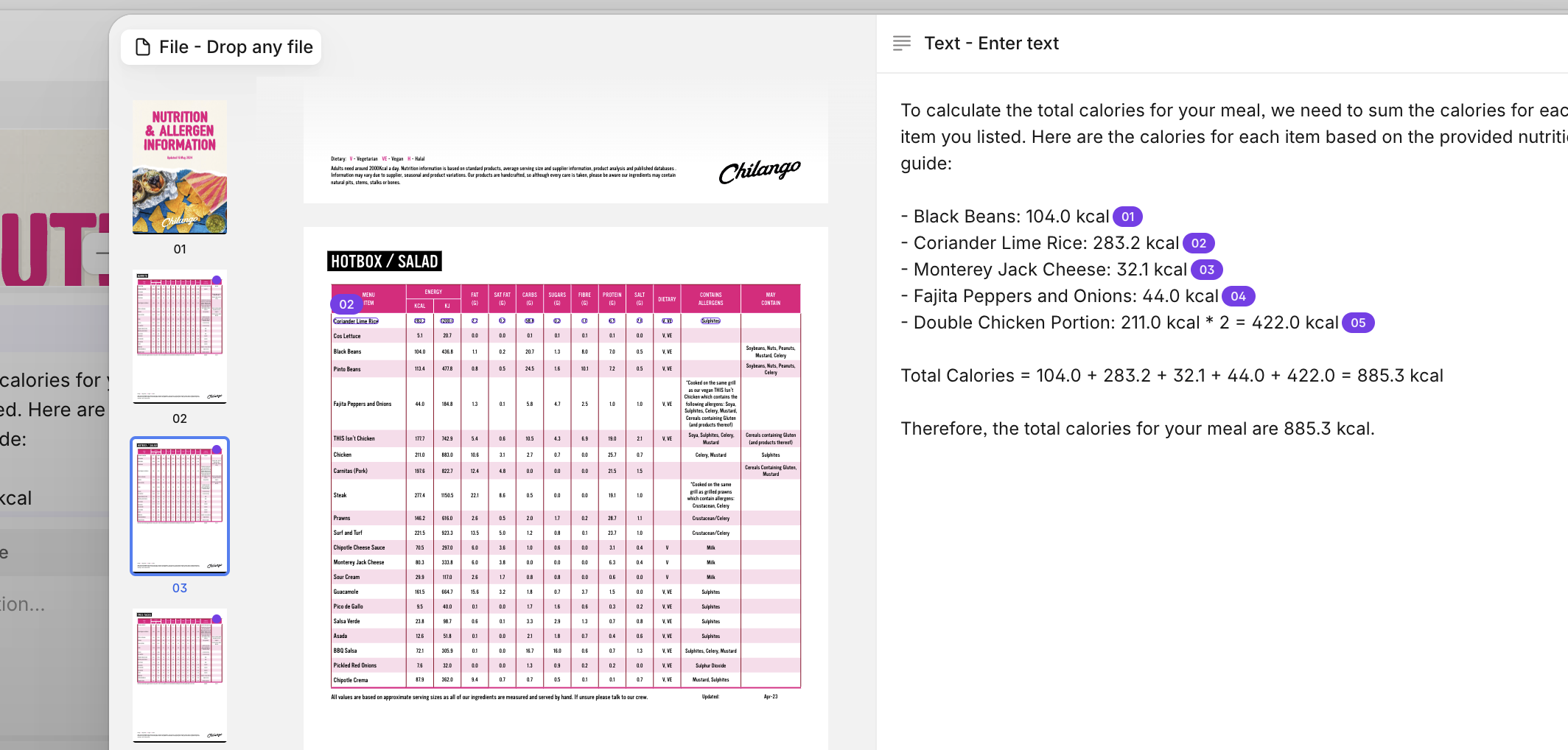

As you can see above, AI Citations in Go pinpoint the exact claims that an LLM used to calculate the calories in someone's lunch from a large, dense PDF.

You can enable AI Citations by toggling the option on within the Property itself (see the AI Citations option below).

You can then click the Lightbulb icon in the relevant section of the Entity or Table view.

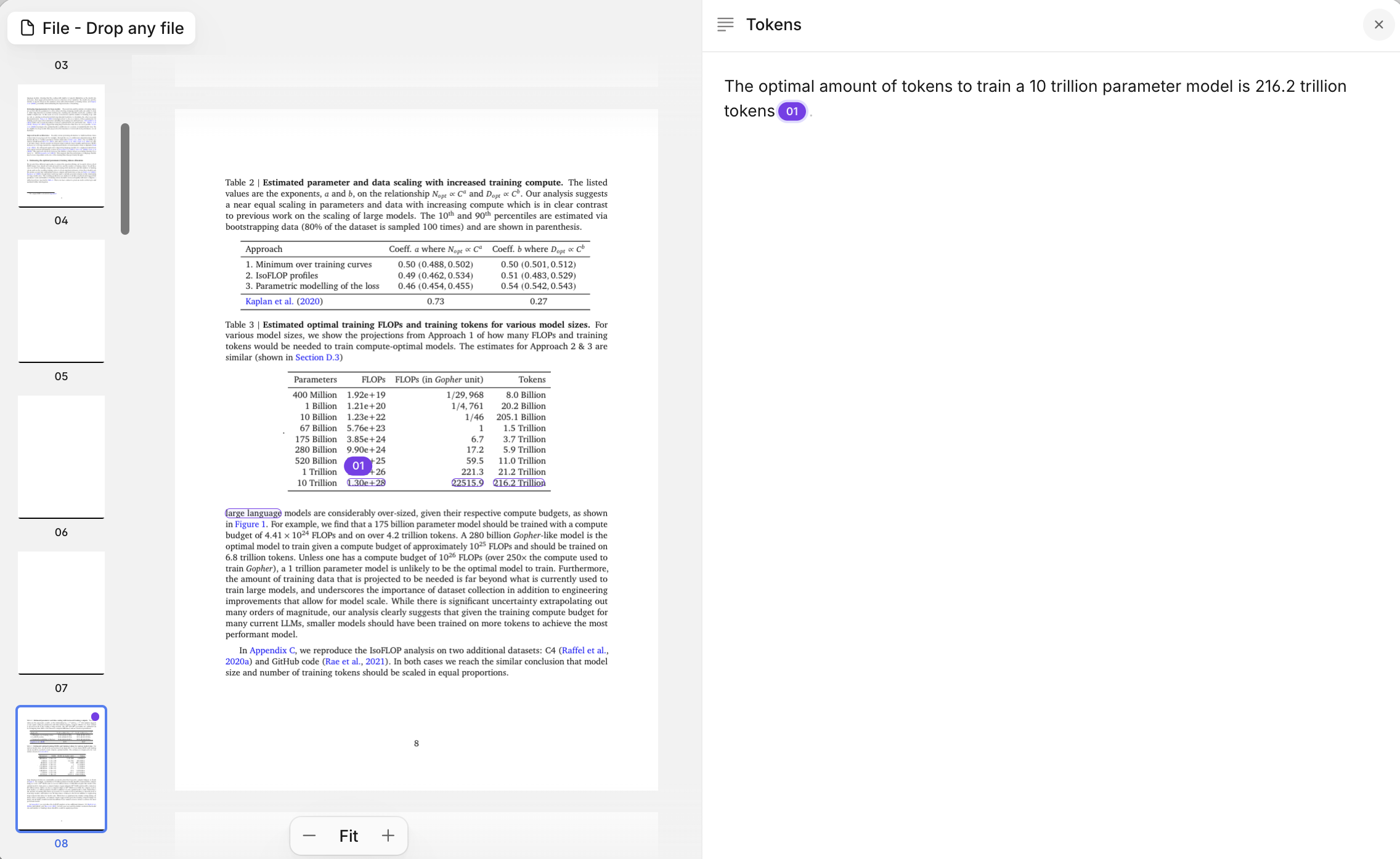

Users can click on the purple numbered icons to snap to the correct claim in the source, cutting QA and human review from hours to seconds. In the example below, clicking 01 jumps to the relevant row in a table on page 8.
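To make the idea of "snapping" from a numbered icon to a place in the source more concrete, here is a minimal conceptual sketch in Python. It is not the product's API or data format: the `Citation` class, its fields, and `find_citation` are hypothetical names invented for illustration, and the bounding-box coordinates are made-up values. It only assumes that each numbered marker can be associated with a page and a region on that page.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Citation:
    """Hypothetical record linking a numbered marker to a region of the source."""
    marker: str  # label shown on the numbered icon, e.g. "01"
    page: int    # 1-based page number in the source PDF
    bbox: tuple  # assumed (x0, y0, x1, y1) region on that page


def find_citation(citations: list, marker: str) -> Optional[Citation]:
    """Return the citation for a marker, or None if the marker is unknown."""
    by_marker = {c.marker: c for c in citations}
    return by_marker.get(marker)


# Illustrative data only: marker "01" points at a table row on page 8.
citations = [
    Citation(marker="01", page=8, bbox=(72.0, 310.0, 540.0, 328.0)),
    Citation(marker="02", page=3, bbox=(72.0, 120.0, 540.0, 160.0)),
]

target = find_citation(citations, "01")
if target is not None:
    print(f"Jump to page {target.page}, region {target.bbox}")
```

A reviewer-facing viewer would use the page and region to scroll to and highlight the cited passage, which is what makes checking a claim a single click rather than a manual search.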

The longer the document, and the more integral human review is to your workflow, the more powerful this feature is.

FAQs

- What are the cost/token implications of grounding?

  - A grounded property costs ~20% more than a non-grounded property. However, the act of grounding itself may improve the LLM’s accuracy.

- What input and output property types does AI Citations work with?

  - Input = PDF

  - Output = Text, JSON, Numbers, URLs, Single Selects, Multi-Selects, and Collections
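As a rough illustration of the ~20% figure from the cost FAQ, the sketch below estimates what a grounded property might cost given the cost of the same property without grounding. The function name, the unit of cost, and the example value of 100 are assumptions for illustration; the 20% overhead is approximate, so treat the result as an estimate rather than a quote.

```python
# Assumption: grounding adds roughly 20% to a property's cost (the "~20%" above).
GROUNDING_OVERHEAD = 0.20


def grounded_cost(base_cost: float, overhead: float = GROUNDING_OVERHEAD) -> float:
    """Estimate the cost of a grounded property from its non-grounded cost."""
    if base_cost < 0:
        raise ValueError("base_cost must be non-negative")
    return base_cost * (1 + overhead)


# Hypothetical example: a property that costs 100 units without grounding.
base = 100.0
estimate = grounded_cost(base)
print(f"Non-grounded: {base:.2f}, grounded (approx.): {estimate:.2f}")
print(f"Approximate extra cost: {estimate - base:.2f}")
```

Whether the extra cost is worthwhile depends on how much human review the property needs; the potential accuracy improvement mentioned above is not captured in this arithmetic.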
