Bring Your Own Model

Configure Go to use models hosted in your own accounts

The default models available in Go are served by V7's account with the respective model provider (OpenAI/Anthropic/Google, etc).

Some users may instead want to integrate models hosted with their own cloud provider (e.g. Azure). This guide explains how to set them up.

Important: if you want to use only your own models/API keys, make sure that you configure a key for Azure OCR, as all files in Go are OCRed on upload.

You can configure which models are available on the /settings/ai-models page in the UI.

Important: the naming standards given below are required because Go uses them in the requests it makes. If your configuration does not match, Go will not be able to call the models.

IP Addresses

If you need to update firewall rules to allow Go traffic, the IPs are:

- 18.202.82.120

- 54.75.198.141
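When reviewing firewall logs or rules, it can help to check a source address against the two IPs above. The following is a minimal sketch using only the Python standard library. The constant `GO_SOURCE_IPS` and the function `is_go_source_ip` are hypothetical names chosen for illustration and are not part of Go.

```python
import ipaddress

# The two IPs listed in this guide as the source of Go traffic.
GO_SOURCE_IPS = frozenset(
    ipaddress.ip_address(ip) for ip in ("18.202.82.120", "54.75.198.141")
)


def is_go_source_ip(candidate: str) -> bool:
    """Return True if `candidate` is one of the Go IPs listed in this guide.

    Hypothetical helper for illustration; strings that are not valid
    IP addresses are treated as non-matching.
    """
    try:
        return ipaddress.ip_address(candidate) in GO_SOURCE_IPS
    except ValueError:
        return False
```

For example, `is_go_source_ip("54.75.198.141")` returns `True`, while any other address returns `False`.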

Azure-hosted OpenAI GPT models

For general setup, please follow the Azure documentation.

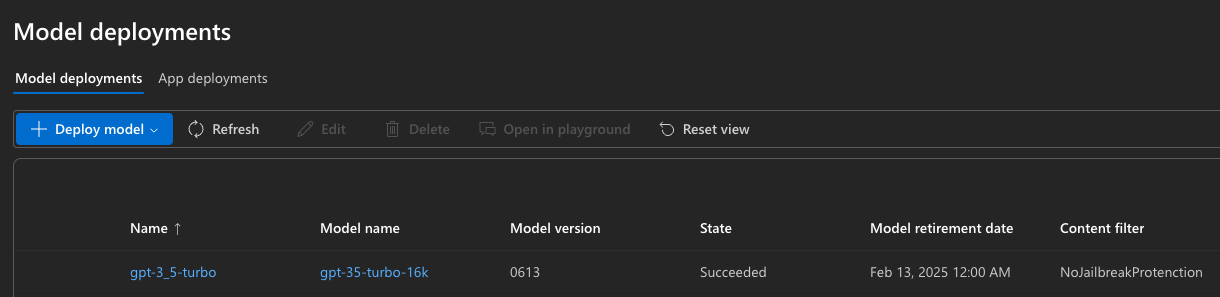

The required naming standard in the table below is configured in the Deployments tab of Azure OpenAI Service.

| Name | Model Name | Model version |
|---|---|---|
| gpt-3_5-turbo | gpt-35-turbo-16k | 0613 |
| gpt-4o | gpt-4o | 2024-08-06 |
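Because a mismatch in these names prevents Go from calling the models, you may want to compare your deployments against the table before saving the configuration. The sketch below assumes you have copied your deployment names, model names, and versions from the Deployments tab by hand into a dictionary. It does not call Azure, and `REQUIRED_DEPLOYMENTS` and `find_mismatches` are hypothetical names used only for illustration.

```python
# Required deployments, copied from the table above:
# deployment name -> (model name, model version)
REQUIRED_DEPLOYMENTS = {
    "gpt-3_5-turbo": ("gpt-35-turbo-16k", "0613"),
    "gpt-4o": ("gpt-4o", "2024-08-06"),
}


def find_mismatches(deployments: dict[str, tuple[str, str]]) -> list[str]:
    """Return one message per deployment that does not match the table.

    `deployments` maps each deployment name you created to its
    (model name, model version), entered by hand from the Deployments tab.
    """
    problems = []
    for name, (model, version) in deployments.items():
        expected = REQUIRED_DEPLOYMENTS.get(name)
        if expected is None:
            problems.append(f"{name}: name is not in the naming table")
        elif expected != (model, version):
            problems.append(
                f"{name}: expected {expected[0]} {expected[1]}, "
                f"got {model} {version}"
            )
    return problems
```

An empty list means every deployment you entered matches a row of the table exactly.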

We recommend setting the Content filter to NoJailbreakProtection.

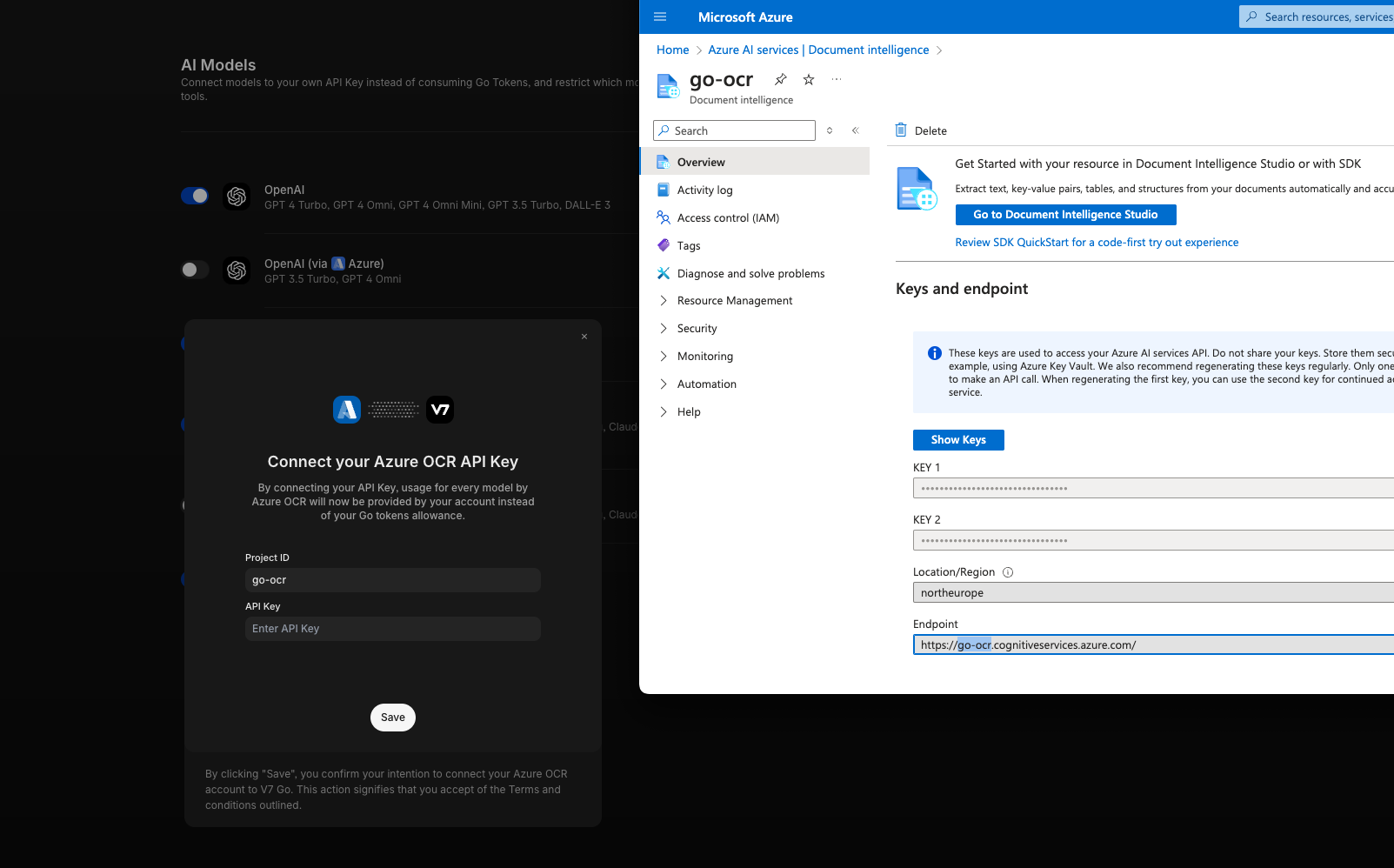

Azure OCR

Enable Azure OCR under Azure AI Service | Document intelligence.

The Endpoint field contains a value of the form https://go-ocr.cognitiveservices.azure.com/. In this example, go-ocr is the Project ID to provide in the Go configuration.
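To avoid copying the wrong part of the endpoint, the Project ID can be extracted programmatically. This is a minimal sketch that assumes the endpoint always has the form shown above, with the Project ID as the subdomain of `cognitiveservices.azure.com`. The function name `project_id_from_endpoint` is hypothetical.

```python
from urllib.parse import urlparse

# Host suffix taken from the example endpoint in this guide.
AZURE_SUFFIX = ".cognitiveservices.azure.com"


def project_id_from_endpoint(endpoint: str) -> str:
    """Return the subdomain of the endpoint, which is the Project ID.

    Raises ValueError if the host does not end with AZURE_SUFFIX.
    """
    host = urlparse(endpoint).hostname or ""
    if not host.endswith(AZURE_SUFFIX):
        raise ValueError(f"unexpected endpoint host: {host!r}")
    # Strip the fixed suffix; what remains is the Project ID.
    return host[: -len(AZURE_SUFFIX)]
```

Calling `project_id_from_endpoint("https://go-ocr.cognitiveservices.azure.com/")` returns `go-ocr`, the value to enter in the Go configuration.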

If you have any questions, reach out to [email protected].
